Segmentation & object detection by color.

In this tutorial I explain how to segment an image or detect objects by color, in this case red.

This task is simple, but there are a few things we must know.

First I explain segmentation, with demo code that decides for each image pixel whether it is red or not. Then I explain how we can detect objects, which is similar but rests on a different concept.

At first, we might decide to take the red channel of the image and look at its values: if the value is high we have a red pixel, otherwise we do not.

This is incorrect. Why? Imagine a pixel whose red channel holds 255, the highest possible value, so we decide it is a red pixel. But if the green and blue channels also hold 255, we have a white pixel instead of a red one. So how do we determine whether a pixel is red or not?
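To make the problem concrete, here is a minimal sketch of the naive test. The function name `naive_is_red` and the cutoff of 200 are made up for this illustration and are not part of the demo code.

```c
#include <stdbool.h>

/* Arbitrary cutoff, chosen only for this illustration. */
#define NAIVE_RED_CUTOFF 200

/* Naive test: decides from the red channel alone. */
bool naive_is_red(unsigned char red, unsigned char green, unsigned char blue) {
    (void)green; /* ignored on purpose: this is the flaw */
    (void)blue;
    return red > NAIVE_RED_CUTOFF;
}
```

Calling `naive_is_red(255, 0, 0)` returns true, as expected, but `naive_is_red(255, 255, 255)` returns true as well, so a white pixel is accepted as red.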

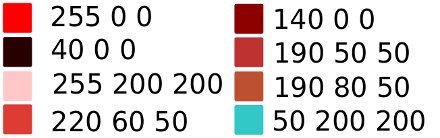

OK, let's take some color values and work out how to decide whether each one is red or not.

We can define red as a high red value combined with low blue and green values. Moreover, the green and blue values must not differ much from each other, otherwise the color drifts toward orange or magenta.

Let's build a simple graph with some values to see this better.

In this graph we see that the red value stays above a minimum limit, while the green and blue values stay below a maximum limit.

We will call these limits the red threshold and the blue-green threshold (r_threshold, bg_threshold).

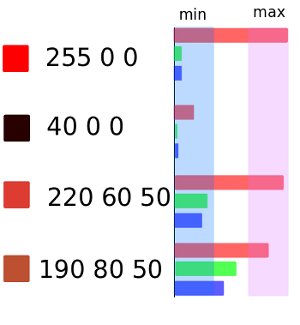
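As a small sketch, the rule can be written as a single function. The struct `RedLimits`, the function `is_red_pixel` and the field `bg_diff` (the limit on the green/blue difference) are names assumed here for illustration, and the numeric limits in the usage note are example values only.

```c
#include <stdbool.h>
#include <stdlib.h>

/* Limits of the rule; the right values depend on your images. */
typedef struct {
    int r_threshold;  /* red must be above this */
    int bg_threshold; /* green and blue must be below this */
    int bg_diff;      /* green and blue must differ by less than this */
} RedLimits;

/* Returns true when the pixel satisfies all four conditions of the rule. */
bool is_red_pixel(int red, int green, int blue, RedLimits lim) {
    return red > lim.r_threshold
        && green < lim.bg_threshold
        && blue < lim.bg_threshold
        && abs(green - blue) < lim.bg_diff;
}
```

With example limits of 150, 100 and 50, pure red (255, 0, 0) is accepted and white (255, 255, 255) is rejected. The pixel (200, 90, 10) is rejected too, because green and blue differ by 80.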

This code computes, for each pixel, whether it is red or not and creates a black-and-white image. This is our segmentation image, and we can use it to label each object: we call cvFindContours to retrieve the contours of each object detected in the image.

    // threshold is the red limit (r_threshold in the text), BG_threshold the
    // green/blue limit (bg_threshold) and BG_diff the largest difference
    // allowed between green and blue.
    for (y = 0; y < img->height; y++) {
        for (x = 0; x < img->width; x++) {
            // img is assumed to be an 8-bit, 3-channel image in BGR order
            red = ((uchar*)(img->imageData + img->widthStep * y))[x * 3 + 2];
            green = ((uchar*)(img->imageData + img->widthStep * y))[x * 3 + 1];
            blue = ((uchar*)(img->imageData + img->widthStep * y))[x * 3];

            // Matching pixel in the single-channel result image
            uchar* temp_ptr = &((uchar*)(img_result_threshold->imageData + img_result_threshold->widthStep * y))[x];

            if ((red > threshold) && (green < BG_threshold) && (blue < BG_threshold) && (abs(green - blue) < BG_diff)) {
                temp_ptr[0] = 255; // White: the pixel is red
            }
            else {
                temp_ptr[0] = 0; // Black: anything else
            }
        }
    }

    // Morphological closing (6 iterations) fills small holes in the mask
    cvMorphologyEx(img_result_threshold, img_morph, img_temp, NULL, CV_MOP_CLOSE, 6);

    cvNamedWindow("Threshold", 1);
    cvShowImage("Threshold", img_morph);

    // Retrieve the contour of each white region
    cvFindContours(img_morph, storage, &contour, sizeof(CvContour), CV_RETR_CCOMP, CV_CHAIN_APPROX_SIMPLE, cvPoint(0, 0));

    // Draw each contour on the source image in a random color
    for (; contour != 0; contour = contour->h_next) {
        CvScalar color = CV_RGB(rand() & 255, rand() & 255, rand() & 255);
        cvDrawContours(img, contour, color, color, -1, 3, 8, cvPoint(0, 0));
    }

And this is the result: in the image we can see a colored border around each detected red object. The system detects two objects: the notebook and the notebook corner.

This works well for image segmentation by color, but imagine we have the objects of an image, and we want to take each object and know whether its predominant color is red or not.

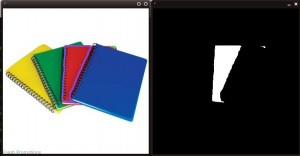

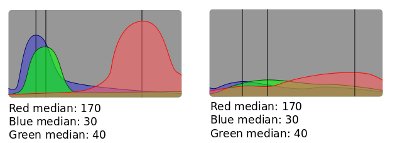

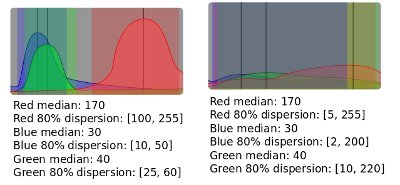

The theory is the same, but applied to the object's histogram, using the median to determine the thresholds.

But this is not enough, because we could still get a gray image, as we can see in the second histogram. So we need one more parameter: the dispersion of each channel.

In the first histogram we can be sure that the object is predominantly red; in the second histogram we cannot.
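The text does not spell out how the median and the dispersion are combined, so the following is only one possible reading, written as a sketch. It assumes that the median of each channel is tested against the same kind of thresholds used for single pixels, and that the dispersion is measured as the mean absolute deviation around the median. The names `ChannelStats`, `channel_stats`, `object_is_red` and `max_spread` are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

/* Summary of one color channel over the pixels of one object. */
typedef struct {
    int median;    /* median intensity, 0..255 */
    double spread; /* mean absolute deviation around the median */
} ChannelStats;

/* Builds a 256-bin histogram of the values, then derives the median and
   the spread from it. Returns zeros when n is 0. */
ChannelStats channel_stats(const unsigned char *values, size_t n) {
    ChannelStats s = {0, 0.0};
    size_t hist[256] = {0};
    size_t i, seen = 0;
    double total = 0.0;
    int v;

    if (n == 0) return s;

    for (i = 0; i < n; i++) hist[values[i]]++;

    /* Median: first bin where the cumulative count reaches half of n
       (the lower median when n is even). */
    for (v = 0; v < 256; v++) {
        seen += hist[v];
        if (2 * seen >= n) { s.median = v; break; }
    }

    /* Spread: average distance of the values from the median. */
    for (v = 0; v < 256; v++) {
        int d = v > s.median ? v - s.median : s.median - v;
        total += (double)d * (double)hist[v];
    }
    s.spread = total / (double)n;
    return s;
}

/* Assumed decision rule: the medians must satisfy the red rule and no
   channel may be too dispersed. */
bool object_is_red(ChannelStats r, ChannelStats g, ChannelStats b,
                   int r_threshold, int bg_threshold, double max_spread) {
    return r.median > r_threshold
        && g.median < bg_threshold
        && b.median < bg_threshold
        && r.spread < max_spread
        && g.spread < max_spread
        && b.spread < max_spread;
}
```

To use it, collect the red, green and blue values of the pixels inside one contour into three arrays, call `channel_stats` on each array, and pass the three results to `object_is_red`. The limits, like the pixel thresholds, have to be tuned for your images.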